-

Introduction

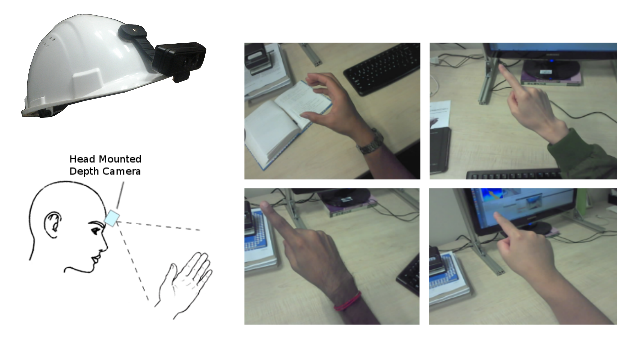

Detecting hand actions from ego-centric depth sequences is a challenging problem in practice, owing mostly to the complex and dexterous nature of hand articulations and to non-stationary camera motion. We address this problem with a Hough-transform-based approach coupled with a discriminatively learned error-correcting component that tackles the well-known issue of incorrect votes from the Hough transform. In this framework, local parts vote collectively for the start and end positions of each action over time. We also construct an in-house annotated dataset of 300 long videos, containing 3,177 single-action subsequences over 16 action classes collected from 26 individuals. Our system is empirically evaluated on this real-life dataset for both the action recognition and action detection tasks, and is shown to produce satisfactory results.
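To make the idea of temporal Hough voting concrete, here is a minimal, generic sketch in which local parts cast weighted votes for the start and end frames of an action. It is not the authors' method: the vote tuple format, the function names `accumulate_votes` and `detect_best`, and the simple "strongest start, then strongest end after it" decoding are all hypothetical, and the discriminatively learned error-correcting component is not modeled at all.

```python
def accumulate_votes(votes, num_frames, num_classes):
    """Accumulate weighted temporal votes into per-class start/end histograms.

    Each vote is a hypothetical tuple (t, cls, start_offset, end_offset, weight):
    a local part observed at frame t predicts that an action of class `cls`
    starts at t + start_offset and ends at t + end_offset.
    """
    start_acc = [[0.0] * num_frames for _ in range(num_classes)]
    end_acc = [[0.0] * num_frames for _ in range(num_classes)]
    for t, cls, start_offset, end_offset, weight in votes:
        s, e = t + start_offset, t + end_offset
        # Votes that fall outside the sequence are simply dropped.
        if 0 <= s < num_frames:
            start_acc[cls][s] += weight
        if 0 <= e < num_frames:
            end_acc[cls][e] += weight
    return start_acc, end_acc


def detect_best(start_acc, end_acc):
    """Return (cls, start, end, score) for the highest-scoring class.

    For each class, take the frame with the most start votes, then the frame
    with the most end votes at or after that start; the score is their sum.
    """
    best = None
    for cls, (sa, ea) in enumerate(zip(start_acc, end_acc)):
        s = max(range(len(sa)), key=sa.__getitem__)
        e = max(range(s, len(ea)), key=ea.__getitem__)
        score = sa[s] + ea[e]
        if best is None or score > best[3]:
            best = (cls, s, e, score)
    return best


# Usage: ten parts (frames 10..19) agree on a class-1 action spanning
# frames 10..19, plus one stray class-0 vote.
votes = [(t, 1, 10 - t, 19 - t, 1.0) for t in range(10, 20)]
votes.append((20, 0, -15, 10, 1.0))
print(detect_best(*accumulate_votes(votes, num_frames=40, num_classes=2)))
# (1, 10, 19, 20.0)
```

Because many parts vote independently, the consistent votes pile up at the true boundaries while isolated wrong votes stay small; the error-correcting component described above is aimed precisely at votes that are wrong yet still influential, which this sketch does not handle.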

-

Dataset

Info

The dataset contains three parts:

1. Training data

- Number of Subjects : 15

- Number of gestures : 16

- Total number of frames : 112,640

2. Testing data for action recognition

- Number of Subjects : 3

- Number of gestures : 16

- Total number of frames : 22,025

3. Testing data for action detection

- Number of Subjects : 9

- Number of gestures : 16

- Total number of frames : 19,537
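Summing the frame counts of the three parts gives the total number of frames in the dataset:

$$
112{,}640 + 22{,}025 + 19{,}537 = 154{,}202
$$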

Download

1. Download 'HandAction.tar', 'Training data(part1)', and 'Training data(part2)'.

2. Extract 'HandAction.tar'. Let $data_path$ denote the path to which you extract the data.

3. Extract 'Training data(part1)' and 'Training data(part2)' into '$data_path$/Train/' folder.
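The extraction steps can be scripted roughly as follows. This is a sketch under assumptions: it assumes both training parts are tar archives (their format is not stated here), the local file names passed in are hypothetical, and `extract_dataset` is an illustrative helper rather than a script shipped with the dataset.

```python
import tarfile
from pathlib import Path


def _extract(archive, dest):
    """Extract a tar archive into dest, using the safe 'data' filter if available."""
    with tarfile.open(archive) as tar:  # mode "r" auto-detects compression
        if hasattr(tarfile, "data_filter"):
            # Python versions with extraction filters: reject absolute paths,
            # links escaping dest, device files, and similar members.
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)


def extract_dataset(data_path, main_archive, training_parts):
    """Unpack HandAction.tar into data_path, then every training part
    into data_path/Train/. Returns the Train folder."""
    data_path = Path(data_path)
    data_path.mkdir(parents=True, exist_ok=True)
    _extract(main_archive, data_path)
    train_dir = data_path / "Train"
    train_dir.mkdir(parents=True, exist_ok=True)
    for part in training_parts:
        _extract(part, train_dir)
    return train_dir
```

For example, `extract_dataset("/path/to/data", "HandAction.tar", ["train_part1.tar", "train_part2.tar"])`, where the two training-part file names are placeholders for whatever the downloaded files are called.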

Details

Each sequence contains two parts:

- $seq_name$.xml (label file)

- $seq_name$/ (a folder containing the images)

Each $seq_name$ folder contains three series of images:

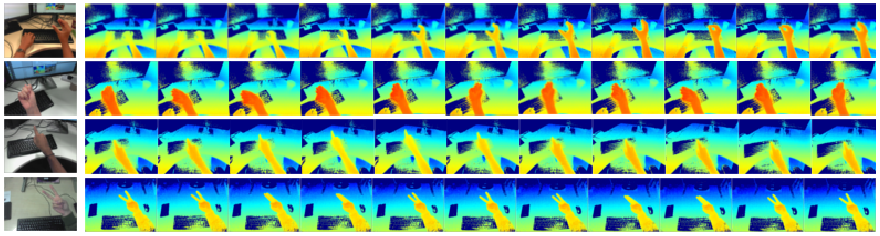

- depth_#.png (depth image, 16-bit, 1 channel)

- confi_#.png (IR image, 16-bit, 1 channel)

- color_#.png (RGB image, 8-bit, 3 channels)

where # denotes the zero-based frame index.
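A small helper can enumerate the frames of one sequence in order. This is a minimal sketch that assumes the frame index is written as a plain decimal integer (possibly without zero padding) and that $seq_name$.xml sits in the same directory as the $seq_name$/ folder; the function names are hypothetical. Sorting numerically matters: a plain string sort would place depth_10.png before depth_2.png.

```python
import re
from pathlib import Path

# File-name pattern from the dataset description: <stream>_<frame index>.png
FRAME_PATTERN = re.compile(r"^(depth|confi|color)_(\d+)\.png$")
STREAMS = ("depth", "confi", "color")


def group_frame_names(names):
    """Group file names by stream and sort each group by numeric frame index."""
    grouped = {stream: [] for stream in STREAMS}
    for name in names:
        match = FRAME_PATTERN.match(name)
        if match:  # files that do not match the pattern are ignored
            grouped[match.group(1)].append((int(match.group(2)), name))
    # Sort numerically so that frame 10 comes after frame 2, not before it.
    return {stream: [name for _, name in sorted(items)]
            for stream, items in grouped.items()}


def list_frames(seq_dir):
    """Return {stream: [Path, ...]} for one $seq_name$/ folder."""
    seq_dir = Path(seq_dir)
    grouped = group_frame_names(p.name for p in seq_dir.iterdir())
    return {stream: [seq_dir / name for name in names]
            for stream, names in grouped.items()}


def label_path(seq_dir):
    """Return the $seq_name$.xml label file assumed to sit next to the folder."""
    seq_dir = Path(seq_dir)
    return seq_dir.parent / f"{seq_dir.name}.xml"
```

To read the pixel data, use a loader that preserves bit depth for the 16-bit depth and IR images, for example OpenCV's `cv2.imread(str(path), cv2.IMREAD_UNCHANGED)`; default 8-bit loading would discard the depth precision.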

-

Publications

[1] Chi Xu, Lakshmi Narasimhan Govindarajan, Li Cheng. Hand Action Detection from Ego-centric Depth Sequences. arXiv, 2016.